In a modern world where people are ready to give up their privacy in exchange for some comfort, how exposed are we? How are companies using our data to make things more comfortable and personal? And how much comfort is too much?

We shall seek answers to these questions in this article.

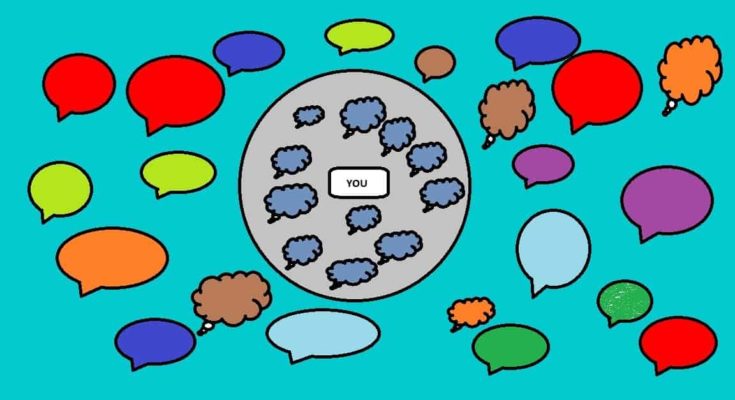

A filter bubble is the term used for a person’s isolation from views that oppose their own. It forms when a person only listens to similar views and refuses to accept an opinion that differs from their own.

In this article I shall explain how the uncontrolled personalization of content through artificial intelligence leads to the formation of filter bubbles, and how harmful they really are.

To understand filter bubbles and how they form, we have to take a closer look at what creates them.

Contrary to popular belief, filter bubbles are NOT the creation of artificial intelligence. We humans have always lived in social filter bubbles of one kind or another. We like making friends who hold the same beliefs as ours, and we like interacting with people of similar intellectual tastes.

This is nothing to be outraged about; it is life… We all want peaceful lives, and one way to accomplish this is to never listen to dissenting voices or ‘the opposite view’.

The best way to survive is to avoid unnecessary quarrels, and this belief has long shaped how we humans choose our social circles.

Then what is really wrong with these bubbles?

Yes, filter bubbles are natural, but too much of anything is bad.

Today, with technology that can guess ‘what you may like to hear’, we are witnessing the possibility of mass manipulation.

Most of the innocent-looking algorithms used to guess what a user may like to read depend on the following methods.

User-history based filtering

This method recommends content based on what you have seen and liked before. After some time, the algorithm starts pushing you into an ‘echo chamber’, where you only see posts that resemble the ones you have already seen and liked. This reinforces people’s beliefs to the extent that they start believing only they hold the correct opinion.
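
To make the idea concrete, here is a minimal sketch of history-based filtering. It assumes every article carries a list of topic tags and that the ‘profile’ of a user is simply a count of the tags in the articles they liked. The function names, the tags and the sample articles are all made up for illustration; real recommender systems are far more elaborate.

```python
from collections import Counter


def build_profile(liked_articles):
    """Count how often each topic tag appears in the articles a user liked."""
    profile = Counter()
    for article in liked_articles:
        profile.update(article["topics"])
    return profile


def recommend(profile, candidates, k=2):
    """Return the k candidates whose topics best match the user's profile."""
    def score(article):
        # Tags the user never liked are missing from the Counter and count as 0.
        return sum(profile[topic] for topic in article["topics"])

    return sorted(candidates, key=score, reverse=True)[:k]


# Hypothetical history: the user has only liked articles about "party_x".
liked = [
    {"title": "A", "topics": ["party_x", "economy"]},
    {"title": "B", "topics": ["party_x", "immigration"]},
]

# Hypothetical pool of articles the system could show next.
candidates = [
    {"title": "Party X rally", "topics": ["party_x"]},
    {"title": "Party Y manifesto", "topics": ["party_y", "economy"]},
    {"title": "Party X on economy", "topics": ["party_x", "economy"]},
    {"title": "Party Y rally", "topics": ["party_y"]},
]

for article in recommend(build_profile(liked), candidates):
    print(article["title"])
# Party X on economy
# Party X rally
```

Both recommendations come from the side the user already agrees with, and liking them would tilt the profile even further in that direction. That feedback loop is the echo chamber.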

Collaborative filtering

This method first observes and evaluates patterns of content consumption among ‘similar types of users’. It then predicts which content to recommend to a specific user, using a nearest-neighbor approach. Eventually, such filters push a group of people into a specific filter category, where they are all recommended similar content based on their watch history.
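
The nearest-neighbor step can be sketched roughly as follows. I am assuming that each user is represented by the set of items they liked and that similarity between two users is measured with the Jaccard index (shared likes divided by total likes). That similarity measure, the function names and the sample users are my own choices for illustration, not a description of any particular platform.

```python
def jaccard(a, b):
    """Similarity of two sets of liked items: |intersection| / |union|."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def recommend_from_neighbors(target, likes, k=2):
    """Suggest items liked by the k users most similar to `target`."""
    others = [user for user in likes if user != target]
    neighbors = sorted(
        others,
        key=lambda user: jaccard(likes[target], likes[user]),
        reverse=True,
    )[:k]

    # Each neighbor "votes" for the items the target has not seen yet.
    votes = {}
    for user in neighbors:
        for item in likes[user] - likes[target]:
            votes[item] = votes.get(item, 0) + 1

    # Most-voted items first; ties are broken alphabetically.
    return sorted(votes, key=lambda item: (-votes[item], item))


# Hypothetical users: three of them consume "x" content, one consumes "y".
likes = {
    "alice": {"x1", "x2", "x3"},
    "bob": {"x1", "x2", "x4"},
    "carol": {"x2", "x3", "x4", "x5"},
    "dave": {"y1", "y2"},
}

print(recommend_from_neighbors("alice", likes))
# ['x4', 'x5']
```

Alice’s nearest neighbors are Bob and Carol, so she is only ever offered more ‘x’ content. Nothing Dave reads can reach her, because he never becomes one of her neighbors.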

The harm of too much personalized content

On its own, this still doesn’t seem enormously harmful. But it becomes scary when we combine it with the fact that articles with opposing views are not shown to you because ‘you may not like them’.

Soon a person is effectively shut off from dissenting views and gets accustomed to reading similar posts all the time. In short, these algorithms only show you what you want to read.

This can have a massive impact on a person’s mind. They may soon become intolerant of opposing views, and may even start thinking that such views make no sense and are outlandish.

Thus, a supposedly helpful algorithm that was meant to show you what you may like, and to make life easier and more comfortable, can soon become a weapon used against you.

How can someone use filter bubbles against you?

Filter bubbles can be used to feed a particular opinion to the masses. This can be easily accomplished by showing you targeted articles and posts. After a certain time, these algorithms can be used to brainwash people.

Excessive social media campaigning has already been suspected of swaying election results.

A sensitive population that is prone to strong emotional attachment to an organization can be manipulated into believing that their organization is somehow threatened by outside forces.

This is very easy to achieve. Just feed a particular opinion regularly, and then suddenly break the filter bubble by making people read opposing views. Their reaction will show how biased people can become from reading targeted posts.

Filter bubbles are among the most effective and silent weapons a person can possess in today’s digital and comfortable world.

How to avoid filter bubbles?

One way to break these bubbles is to NOT allow these search engines to customize your content. You can do so by not liking every article you read. Only like the articles you think are exceptionally well written and that tell the story from every possible perspective.

Although this selective approval of posts helps, it only delays the formation of a filter bubble. Eventually you will be pushed into one of those echo chambers.

Another way to break these bubbles is to go out of your way and read views that oppose yours. This will not only break the bubbles but also make your mind more flexible in accepting opposing views.

Evanesco…